Master the complete spectrum from rapid prototyping to production-ready AI-assisted engineering.

Learn advanced techniques and best practices to future-proof your development workflow.

By Addy Osmani, an Engineering Leader at Google focused on AI and Developer Experience. He brings 25 years of software engineering experience to his writing.

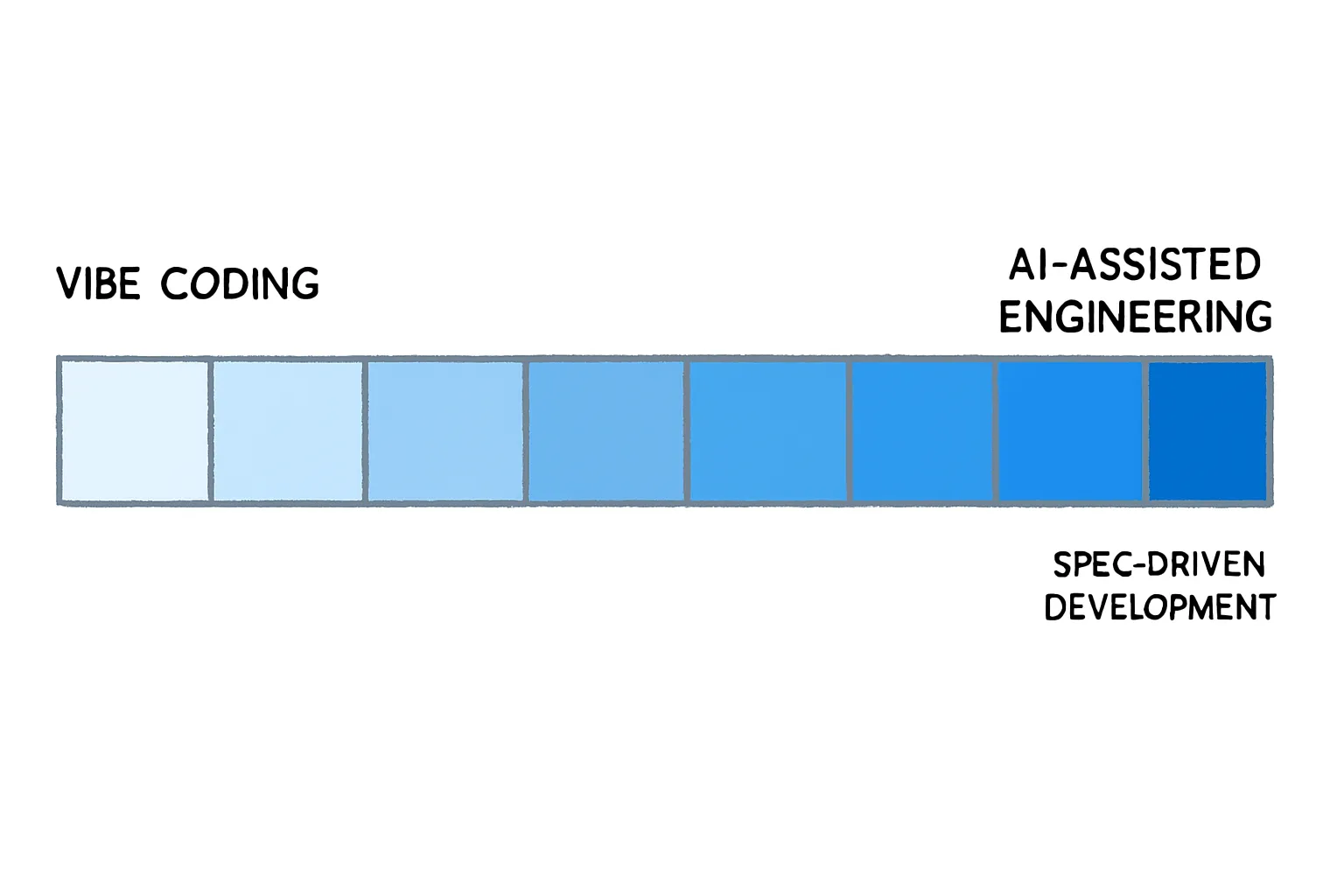

AI-Assisted coding is a spectrum.

What is Vibe Coding?

"Vibe coding" is a playful approach to development that relies on high-level prompting. You give broad instructions, accept AI suggestions, and focus on the overall "vibe" of the project rather than implementation details.

The Andrej Karpathy Vision

Karpathy describes a future where developers interact with AI conversationally, focusing on intent rather than implementation: "I just see stuff, say stuff, run stuff, and copy paste stuff, and it mostly works."

Here's a reality check: The 70% Problem

While vibe coding enables rapid progress (getting 70% of the way to a functional app quickly), the final 30% becomes extremely challenging without deep engineering knowledge:

- Two steps back: fixing one bug introduces others

- Hidden costs: keeping the code maintainable requires expertise

- Diminishing returns: AI tools help experienced developers more than beginners

- Security vulnerabilities: "Vibe coding is fun until you start leaking database credentials"

Important: Vibe Coding ≠ Low Quality

Vibe coding was never meant to describe all AI-assisted coding. It's a specific approach where you don't read the AI's code before running it. Production systems demand much more than a working prototype.

What is AI-Assisted Engineering?

This goes beyond vibe coding. AI-assisted engineering is a more structured approach that combines the creativity of vibe coding with the rigor of traditional engineering practices. It relies on specs and rigor, and emphasizes collaboration between human developers and AI tools to ensure that the final product is not only functional but also maintainable and secure.

The AI-Assisted development spectrum

AI-assisted development is a spectrum that ranges from simple vibe coding to complex, production-ready engineering. Understanding this spectrum helps you choose the right tools and techniques for your project.

Autocomplete

The AI editor predicts your next edits, even across multiple lines, and you accept a suggestion with the Tab key.

Chatbot

You can ask natural-language questions about your codebase and get context-aware answers in the editor.

Agent

Agent mode handles multi-step tasks autonomously, staying in the loop and executing changes for you.

A Two-dimensional framework

Understanding AI-assisted development requires a simple but powerful framework with two dimensions: People (technical proficiency) and AI (level of abstraction).

| | Limited AI (assistance) | Advanced AI (augmentation) |
|---|---|---|
| Technical people | Enhanced Software Development | Transformed Software Development |
| Non-technical people | Basic Assistance | Democratized Software Development |

Enhancing developer capabilities

AI extends existing developer capabilities without radical transformation. Developers maintain expertise to evaluate AI outputs.

- Code completion and suggestions

- Developers can validate quality

- Familiar workflow patterns

Transforming software development

Technical people harness AI for categorically different outcomes. Development workflows and time allocation shift significantly.

- AI brings its own expertise

- Stochastic vs deterministic outcomes

- Requires new trust models

Democratizing who can build software

Non-technical people build software using intuitive, advanced AI. Technology must meet high trust and safety standards.

- Intuitive and user-friendly

- High bar for trust and safety

- Enables new conceptual capabilities

Principles & best practices

Context is Everything

The quality of AI output depends heavily on the quality and relevance of the context you provide. Without good context, AI produces incorrect, irrelevant, or inefficient code.

What to Include:

- Relevant code files and snippets

- Design documents and schemas

- Error messages and logs

- Examples of desired output

- Constraints and requirements

The Three Pillars of Trust

1. Familiarity

How familiar you are with AI tools and the task influences expectations. As familiarity increases, mental models develop and trust builds.

2. Trust

Earned over time through reliable delivery. Includes both ability (competence) and reliability (consistency).

3. Control

Desired level of control varies with trust and task complexity. Higher trust enables more indirect interaction.

Essential best practices

1. Don't code first - plan first

❌ Bad: "Build me a todo app"

✅ Good: "Give me a few options for a todo app architecture, starting with the simplest first. Don't code yet - just outline the approach and ask me which direction to take."

🚀 Best: A mini-PRD or SPEC.md - Define the problem, outline the user journey, and specify the desired outcomes.

9 out of 10 times, AI will suggest a complicated approach that you should ask it to simplify. Always request the plan before implementation.

Use a Plan Mode

Use a Plan Mode to get AI to generate a plan before coding. This helps you understand the architecture and approach before diving into implementation. Pictured is Cline.

Enhance Prompt

Many tools support an "Enhance" prompt to turn a rough idea into a structured spec, iterate on the plan, then build from that plan. Pictured is Bolt.

Write out a SPEC or mini-PRD.

Writing out a SPEC.md or mini-PRD helps clarify requirements and expectations.

2. Provide the right documentation

Check for knowledge cutoff

Ask "Which Tailwind version are you familiar with?" before starting. Many models only know v3, but v4 was released in January 2025.

Include relevant documentation

When using specific APIs or frameworks, paste the relevant documentation directly into the AI's context window.

Set global rules

```text
// Example system prompt
Always follow these guidelines:
1. Define the data model before writing code
2. Start with mock data instead of a database
3. Create a component library and split code into multiple files
4. Centralize state management
5. Batch implementation into smaller chunks
6. Double-check you're changing the correct files
7. Ask follow-up questions if requirements are unclear
```

3. Visual context is pretty powerful

Include screenshots when asking AI to create designs or fix bugs. A picture is worth a thousand words and can enable "one-shot" solutions.

Import from Figma

Import Figma designs directly into your AI coding tool. This allows for seamless integration of design and code.

Attach an Image

Attach mocks, screenshots or designs to your prompts. This helps AI understand the visual context and generate more relevant code.

Browser Screenshots

Pull in live browser screenshots to give your prompts real-time context.

4. Test ruthlessly after every change

Critical: No matter what you do, you'll hit situations where the AI breaks your app.

- Test on localhost after each update

- Open the browser console (Cmd+Option+J in Chrome on Mac) to check for errors

- Small, incremental testing prevents nightmare debugging sessions

5. Create instructions before debugging

❌ Bad: "The button doesn't work"

✅ Good: "I want the Save button to store the form data and then redirect to the dashboard page. Currently it's not saving and shows this error: [paste error]"

Explicitly state what you're trying to accomplish. This specificity helps the AI understand your intention, not just the symptom.

🎯 Prompt Engineering tips

Mastering the art of prompting

Prompt engineering is the discipline of crafting precise, effective instructions for AI models. It's the difference between getting generic responses and receiving tailored, production-ready solutions.

Foundational principles

Provide enough context - garbage in, garbage out

Always assume the AI knows little about your project. Include notes on architecture, libraries, code snippets, and exact error messages.

❌ Bad: "Why is my code not working?"

✅ Good: This JavaScript function using React hooks is expected to update the user's profile when the form is submitted, but instead it's throwing "Cannot read property 'name' of undefined". Here's the code:

```javascript
const updateProfile = (userData) => {
  setUser(userData.name);
};
```

Error occurs on line 2. Using React 18.2.0.

Try to be specific about your goal

Vague questions yield vague answers. Specify the problem precisely with expected vs. actual behavior.

- State the expected behavior clearly

- Describe the current (incorrect) behavior

- Include relevant constraints or requirements

- Specify the desired output format
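The checklist above can be turned into a reusable template. The sketch below is a minimal illustration; `buildBugPrompt` and its field names are hypothetical, not part of any tool's API. It refuses to build a prompt when the expected or actual behavior is missing, since those are the two pieces vague questions usually leave out.

```javascript
// Hypothetical helper: assembles a debugging prompt from the checklist items.
// Expected and actual behavior are mandatory; the rest is optional.
function buildBugPrompt({ expected, actual, constraints = [], outputFormat }) {
  if (!expected || !actual) {
    throw new Error("Describe both the expected and the actual behavior.");
  }
  const lines = [`Expected behavior: ${expected}`, `Actual behavior: ${actual}`];
  if (constraints.length > 0) {
    lines.push("Constraints:", ...constraints.map((c) => `- ${c}`));
  }
  if (outputFormat) {
    lines.push(`Output format: ${outputFormat}`);
  }
  return lines.join("\n");
}

const prompt = buildBugPrompt({
  expected: "Clicking Save stores the form data and redirects to /dashboard",
  actual: "Nothing is saved and the console shows a TypeError",
  constraints: ["React 18.2.0", "No new dependencies"],
  outputFormat: "A unified diff plus a one-paragraph explanation",
});
console.log(prompt);
```

Failing loudly on a missing field is the design choice here: it is cheaper to be reminded before sending than to get a generic answer back.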

Break down complex tasks

Divide large, multi-step problems into smaller, iterative chunks for more focused and manageable responses.

Example: Building a user authentication system

- First: "Design the database schema for user authentication"

- Then: "Create the user registration endpoint"

- Next: "Implement password hashing and validation"

- Finally: "Add JWT token generation and verification"
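One way to run a breakdown like this is to send the steps one at a time and carry the earlier answers forward as context. A minimal sketch, assuming a hypothetical `askModel(prompt)` function that stands in for whatever chat API or CLI you use; it is stubbed here so the example runs offline.

```javascript
// Hypothetical stand-in for a real model call: echoes the current task.
function askModel(prompt) {
  const task = prompt.split("\n").pop();
  return `Done: ${task.replace("Current task: ", "")}`;
}

// Runs each sub-task in order, feeding earlier results back in as context.
function runInSteps(steps, ask) {
  const results = [];
  for (const step of steps) {
    const history = results.map((r, i) => `Step ${i + 1} result: ${r}`);
    const prompt = [...history, `Current task: ${step}`].join("\n");
    results.push(ask(prompt));
  }
  return results;
}

const results = runInSteps(
  [
    "Design the database schema for user authentication",
    "Create the user registration endpoint",
    "Implement password hashing and validation",
    "Add JWT token generation and verification",
  ],
  askModel,
);
console.log(results);
```

In practice you would review each result before moving on; the loop only shows how the context grows step by step.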

Include examples of inputs and outputs

Use "few-shot prompting" with concrete examples to reduce ambiguity and clarify intent.

Create a function that formats currency values.

Examples:

- formatCurrency(2.5) should return "$2.50"

- formatCurrency(1000) should return "$1,000.00"

- formatCurrency(0.99) should return "$0.99"

Leverage roles and personas

Ask the AI to "act as" a specific persona to influence tone, style, and depth of responses.

Effective personas:

- Senior React Developer: "Act as a senior React developer and review my code for potential bugs"

- Performance expert: "You are a JavaScript performance expert. Optimize the following function"

- Security auditor: "Review this authentication code as a security expert"

- Code mentor: "Act like an experienced Python developer mentoring a junior"

Iterate and refine your prompts

Prompt engineering is interactive. Review responses and provide corrective feedback or follow-up questions.

Refinement Strategies:

- "That's a good start, but can you try again without using recursion?"

- "Can you explain why you chose this approach over alternatives?"

- "Please add error handling and input validation"

- "Make this more performant for large datasets"

Scenario-specific strategies for effective prompting

🐛 Debugging code

- Describe symptoms clearly: Explain what the code should do, what it's actually doing, and include exact error messages

- Request line-by-line analysis: "Walk through this function line by line and track the value of the `total` variable"

- Isolate with minimal examples: Reproduce bugs in small, self-contained snippets

- Use code review persona: "Act as a meticulous code reviewer and point out mistakes"

🔧 Refactoring and optimization

- State explicit goals: "Refactor this function to improve readability and reduce code duplication"

- Request explanations: Ask for both refactored code and explanations of changes made

- Use expert mentor persona: "Act like an experienced developer mentoring a junior"

- Specify constraints: "Optimize for speed on large inputs while maintaining readability"

⚡ Implementing new features

- Start high-level: Describe the feature in plain language to get a step-by-step plan

- Provide reference code: Include examples of similar existing components for consistency

- Use inline comments: Write `// TODO:` comments describing needed code

- Define with usage examples: Show how the feature should be used and what it should return

Advanced prompting techniques

Chain of thought prompting

Guide the AI through step-by-step reasoning for complex problems.

Optimize this database query step by step:

1. First, analyze the current query and identify performance bottlenecks

2. Then, suggest specific index optimizations

3. Next, rewrite the query using more efficient joins

4. Finally, explain the expected performance improvement

Current query: [paste query here]

Constraint-based prompting

Set clear boundaries and requirements to guide the AI's output.

Effective constraints:

- Technology stack: "Use only vanilla JavaScript, no external libraries"

- Performance: "Solution must handle 10,000+ items efficiently"

- Compatibility: "Must work in Internet Explorer 11"

- Code style: "Follow Google's JavaScript style guide"

Multi-Step validation

Build verification steps into your prompts to catch errors early.

Create a user registration function that:

1. Validates email format

2. Checks password strength

3. Handles duplicate users gracefully

After writing the function:

1. Test it with valid inputs

2. Test it with invalid inputs

3. Verify error messages are user-friendly

4. Check that it follows our existing code patterns

🔧 Context Engineering

Building information environments for AI

Context engineering moves beyond crafting clever prompts to constructing complete "information environments" for AI models. Think of it as building a system that assembles the right information at the right time.

The paradigm shift: From prompts to information systems

❌ Traditional Prompting

- Static, one-size-fits-all prompts

- Limited context awareness

- Trial-and-error approach

- Inconsistent results

✅ Context Engineering

- Dynamic information assembly

- Rich, relevant context

- Systematic methodology

- Predictable, high-quality outputs

Core principles of Context Engineering

Be precise and specific

Vague requests lead to vague answers. The more detailed and clear your instructions, the better the output.

Precision in practice:

- Include exact version numbers of frameworks

- Specify coding standards and conventions

- Define success criteria clearly

- Provide complete error messages and stack traces

Dynamic information assembly

Context should be assembled dynamically for each request, pulling in different information based on the query or conversation history.

Dynamic assembly strategies:

- Query-specific code retrieval

- Conversation history summarization

- Contextual documentation fetching

- Adaptive information filtering

Think like an Operating System

Consider the AI model as a CPU and its context window as RAM. Load the necessary information into working memory for each specific task.

Memory management principles:

- Load on demand: Bring in information as needed

- Cache frequently used: Keep common patterns accessible

- Garbage collection: Remove outdated context

- Priority scheduling: Most relevant information first

Practical context engineering strategies

💻 For coding and technical tasks

Relevant code context

Share specific files, folders, or code snippets central to the request.

```javascript
// Current file: user-service.js
// Related files: user-model.js, auth-middleware.js
// Database schema: users table structure
// Error log: [full stack trace]
// Goal: Fix user authentication bug
```

Complete error information

Always provide full error messages, stack traces, and relevant log entries.

- Full stack trace, not just the error message

- Browser console errors for frontend issues

- Server logs for backend problems

- Network request/response details

Database schemas

For database-related tasks, include schema information to help generate accurate data interaction code.

Include schema details:

- Table structures and relationships

- Column types and constraints

- Indexes and foreign keys

- Sample data for context

PR feedback integration

Use comments and discussions from pull requests as rich context for prompts.

Valuable PR Context:

- Reviewer comments and suggestions

- Discussion threads and decisions

- Code change rationale

- Testing feedback and requirements

📚 Some general best practices

Design documents

Include relevant sections from design documents to provide broader project context.

Examples and templates

Show the AI examples of desired output format or style using "few-shot" prompting.

Clear constraints

List requirements like libraries to use, coding patterns to follow, or things to avoid.

External knowledge

For domain-specific tasks, retrieve information from wikis or API documentation.

Advanced context engineering patterns

🔍 Retrieval-Augmented Generation (RAG)

When tasks require knowledge beyond the model's training data, fetch relevant information from external sources.

RAG implementation steps:

- Query analysis: Understand what information is needed

- Information retrieval: Fetch relevant documents or data

- Context assembly: Combine retrieved info with the prompt

- Response generation: Generate grounded, factual responses
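The first three steps map onto very little code. A minimal sketch, assuming a tiny in-memory corpus and naive keyword-overlap scoring in place of the embedding search a production system would typically use; `retrieve` and `buildGroundedPrompt` are illustrative names, not a library API. The final step, response generation, is just sending the assembled prompt to a model and is omitted.

```javascript
// Query analysis: reduce text to lowercase word tokens.
function tokenize(text) {
  return text.toLowerCase().match(/[a-z0-9]+/g) ?? [];
}

// Information retrieval: score each document by how many query terms it contains.
function retrieve(query, docs, k = 2) {
  const terms = new Set(tokenize(query));
  return docs
    .map((doc) => ({ doc, score: tokenize(doc.text).filter((t) => terms.has(t)).length }))
    .filter((r) => r.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, k)
    .map((r) => r.doc);
}

// Context assembly: retrieved snippets go in a labeled section above the question.
function buildGroundedPrompt(query, docs) {
  const context = retrieve(query, docs)
    .map((d) => `[${d.source}]\n${d.text}`)
    .join("\n\n");
  return `Answer using only the documentation below.\n\nRelevant documentation:\n${context}\n\nQuestion: ${query}`;
}

const docs = [
  { source: "auth.md", text: "An expired token must be renewed with the refresh endpoint." },
  { source: "billing.md", text: "Invoices are generated on the first day of each month." },
  { source: "auth-errors.md", text: "A 401 response means the token is missing or expired." },
];
console.log(buildGroundedPrompt("Why do I get a 401 when my token expired?", docs));
```

The irrelevant billing document never reaches the prompt, which is the point: the model is grounded in a small, relevant slice rather than everything you have.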

🧠 Memory Management

For long-running tasks, use conversation summarization or external "memory" storage.

Memory management techniques:

- Conversation summarization: Compress long conversations into key points

- Fact extraction: Store important facts in external memory

- Context rotation: Cycle out old information for new

- Hierarchical memory: Organize information by importance and recency
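A common way to combine summarization and rotation is to keep the most recent messages verbatim and fold older ones into a running summary. A minimal sketch, assuming a hypothetical `summarize` function; it is stubbed below, whereas in practice it would usually be another model call that extracts key points and facts.

```javascript
// Hypothetical summarizer stub: truncates each message instead of truly summarizing.
function summarize(previousSummary, messages) {
  const points = messages.map((m) => `${m.role}: ${m.content.slice(0, 40)}`);
  return [previousSummary, ...points].filter(Boolean).join("; ");
}

// Keeps the last `keepRecent` messages verbatim and rotates older ones
// into the running summary.
function compactHistory(state, keepRecent = 4) {
  const overflow = state.messages.length - keepRecent;
  if (overflow <= 0) return state;
  return {
    summary: summarize(state.summary, state.messages.slice(0, overflow)),
    messages: state.messages.slice(overflow),
  };
}

// What actually gets sent: the summary first, then the recent turns.
function toContext(state) {
  const header = state.summary
    ? [{ role: "system", content: `Conversation so far: ${state.summary}` }]
    : [];
  return [...header, ...state.messages];
}

let state = { summary: "", messages: [] };
for (let i = 1; i <= 6; i++) {
  state.messages.push({ role: i % 2 ? "user" : "assistant", content: `message ${i}` });
  state = compactHistory(state);
}
console.log(toContext(state));
```

The context size stays bounded no matter how long the conversation runs; what you trade away is detail in the older turns.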

🛠️ Tool integration

Instruct the model on when and how to use external tools, then feed tool outputs back into context.

Tool integration workflow:

- Define available tools and their capabilities

- Provide clear usage instructions

- Execute tool calls based on AI decisions

- Feed tool outputs back into the conversation

- Continue with enriched context
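The workflow above is a loop: the model either answers or requests a tool, your code runs the tool, and the result goes back into the conversation. A minimal sketch, assuming a hypothetical `callModel` stub that returns either `{ tool, args }` or `{ answer }`; real model APIs each use their own message format for tool calls.

```javascript
// Available tools; their descriptions become the usage instructions.
const tools = {
  countLines: {
    description: "Count the lines in a piece of text. Args: { text: string }",
    run: ({ text }) => text.split("\n").length,
  },
};

const toolInstructions = Object.entries(tools)
  .map(([name, t]) => `- ${name}: ${t.description}`)
  .join("\n");

// Hypothetical model stub: requests a tool once, then answers from the result.
function callModel(messages) {
  const last = messages[messages.length - 1];
  if (last.role === "tool") return { answer: `The file has ${last.content} lines.` };
  return { tool: "countLines", args: { text: "line 1\nline 2\nline 3" } };
}

function runAgent(userPrompt, maxSteps = 5) {
  const messages = [
    { role: "system", content: `You can call these tools:\n${toolInstructions}` },
    { role: "user", content: userPrompt },
  ];
  for (let step = 0; step < maxSteps; step++) {
    const reply = callModel(messages);
    if (reply.answer !== undefined) return { answer: reply.answer, messages };
    const known = Object.prototype.hasOwnProperty.call(tools, reply.tool);
    if (!known) throw new Error(`Unknown tool: ${reply.tool}`);
    // Execute the call the model asked for, then feed the output back in.
    messages.push({ role: "assistant", content: `call ${reply.tool}(${JSON.stringify(reply.args)})` });
    messages.push({ role: "tool", content: String(tools[reply.tool].run(reply.args)) });
  }
  throw new Error("No answer within the step limit");
}

console.log(runAgent("How many lines are in the file?").answer);
```

The step limit matters: without it, a model that keeps requesting tools would loop forever.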

📋 Information formatting

Structure information in a way that's easy for the AI to parse and understand.

Effective formatting techniques:

- Clear headings: Use descriptive section headers

- Bullet points: Break down complex information

- Labeled sections: "Relevant documentation:", "Error logs:", etc.

- Code blocks: Properly formatted code with syntax highlighting

- Structured data: Use tables, lists, and hierarchies
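Labeled sections are easy to generate consistently. A minimal sketch; `formatContext` and the section names are illustrative, not a format any particular model requires. Code sections are fenced so the model can tell where each snippet starts and ends.

```javascript
// Fence marker built at runtime so this snippet can itself live inside Markdown.
const FENCE = "`".repeat(3);

// Renders labeled sections ("Goal:", "Error logs:", ...). Sections with a
// `lang` are wrapped in a code fence; empty sections are dropped entirely.
function formatContext(sections) {
  return sections
    .filter((s) => typeof s.body === "string" && s.body.trim() !== "")
    .map(({ label, body, lang }) => {
      const content = lang === undefined ? body : `${FENCE}${lang}\n${body}\n${FENCE}`;
      return `${label}:\n${content}`;
    })
    .join("\n\n");
}

const text = formatContext([
  { label: "Goal", body: "Fix the login redirect loop" },
  { label: "Relevant code", body: "res.redirect('/login');", lang: "javascript" },
  { label: "Error logs", body: "" },
  { label: "Constraints", body: "- Express 4\n- No new dependencies" },
]);
console.log(text);
```

Dropping empty sections keeps the prompt free of headings that promise information but deliver none.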

Addressing Context Engineering Challenges

🔄 Context Rot

Problem: As conversations get longer, context becomes cluttered with irrelevant or outdated information.

Solutions:

- Periodically summarize and refresh context

- Remove outdated information systematically

- Implement context pruning strategies

- Use conversation checkpoints

📏 Context window limits to keep in mind

Problem: AI models have finite context windows that can be exceeded with large codebases or long conversations.

Solutions:

- Implement intelligent context selection

- Use hierarchical information organization

- Employ context compression techniques

- Break large tasks into smaller chunks
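Intelligent context selection can start as a greedy packer: rank candidate snippets by priority and add them until an estimated token budget is used up. A minimal sketch, assuming roughly four characters per token, which is a rule of thumb for English text rather than a real tokenizer; `packContext` is a hypothetical name.

```javascript
// Rough estimate only; real tokenizers vary by model and language.
const estimateTokens = (text) => Math.ceil(text.length / 4);

// Greedily keeps the highest-priority snippets that fit in the budget.
function packContext(snippets, budgetTokens) {
  const chosen = [];
  let used = 0;
  const ranked = [...snippets].sort((a, b) => b.priority - a.priority);
  for (const s of ranked) {
    const cost = estimateTokens(s.text);
    if (used + cost > budgetTokens) continue; // too big: skip it, smaller ones may still fit
    chosen.push(s);
    used += cost;
  }
  return { chosen, used };
}

const { chosen, used } = packContext(
  [
    { name: "error log", priority: 3, text: "x".repeat(400) }, // ~100 tokens
    { name: "current file", priority: 2, text: "x".repeat(2000) }, // ~500 tokens
    { name: "style guide", priority: 1, text: "x".repeat(200) }, // ~50 tokens
  ],
  300,
);
console.log(chosen.map((s) => s.name), used);
```

Here the large current file is skipped so the error log and style guide both fit; in a real tool you might instead summarize or chunk the oversized file.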

🎯 Systematic approach

Problem: Moving away from trial-and-error to develop consistent methodologies.

Solutions:

- Create context templates for common scenarios

- Establish information inclusion criteria

- Measure and optimize context effectiveness

- Build reusable context engineering patterns

🖥️ CLI Agents & orchestrators

Claude Code

An agentic coding tool that lives in your terminal, understands your codebase, and helps you code faster by executing routine tasks, explaining complex code, and handling git workflows through natural language commands.

Gemini CLI

An open-source AI agent that brings Gemini 2.5 Pro directly into your terminal with generous free usage limits. Built with extensibility in mind, supporting MCP servers and tools for media generation, web search, and enterprise collaboration workflows.

Amp Code

An agentic coding tool engineered to maximize what's possible with today's frontier models - autonomous reasoning, comprehensive code editing, and complex task execution with no token constraints.

Mastering Command-line AI and multi-agent systems

CLI coding agents and orchestrators represent the cutting edge of AI-assisted development, transforming terminals into powerful development environments and enabling teams of AI agents to work collaboratively on complex projects.

Understanding agent types

🖥️ CLI Coding agents

Single AI agents that work directly in your terminal, transforming command-line interfaces into primary development environments.

Popular CLI Agents:

- Gemini CLI: Google's command-line AI assistant

- Claude Code: Anthropic's terminal-based coding agent

- Aider: AI pair programming in your terminal

🎭 Multi-Agent orchestrators

Systems that coordinate multiple AI agents working together, each with specialized roles and capabilities.

Orchestration platforms:

- Claude Squad: Multiple Claude agents working in parallel

- Conductor: Agent workflow orchestration

- Jules: Asynchronous coding agent with web browsing

CLI Agent best practices

Plan before you prompt

Don't jump straight into complex tasks. Take time to plan your request and provide sufficient context for better results.

Effective planning process:

- Define the end goal clearly

- Break down into logical steps

- Identify required context and files

- Consider potential edge cases

- Plan verification steps

Adopt Spec-driven development

For structured and predictable outcomes, write detailed task breakdowns or "mini-PRDs" for agents to follow.

```markdown
# User Authentication Feature Spec

## Goal
Implement secure user authentication with JWT tokens

## Requirements
- Email/password login
- Password hashing with bcrypt
- JWT token generation
- Protected route middleware
- Input validation and sanitization

## Acceptance Criteria
- [ ] User can register with email/password
- [ ] User can login and receive JWT token
- [ ] Protected routes verify JWT tokens
- [ ] Passwords are properly hashed
- [ ] Input validation prevents injection attacks
```

Consider embracing TDD (Test-Driven Development)

Have the AI write tests first, then generate minimal code to make tests pass. This keeps work focused and verifiable.

TDD with AI workflow:

- Red: AI writes failing tests based on requirements

- Green: AI writes minimal code to pass tests

- Refactor: AI improves code while keeping tests green

- Repeat: Continue cycle for additional features
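The red/green cycle is easiest to see in miniature. The sketch below uses a hypothetical `validateRegistration` function loosely inspired by the spec above: the test cases are written first as plain data, and the implementation is the smallest one that makes them pass. In a real project these cases would live in your test runner of choice.

```javascript
// Red: test cases written first, straight from the requirements.
const cases = [
  { name: "accepts a valid registration", input: { email: "ada@example.com", password: "correct-horse-battery" }, ok: true },
  { name: "rejects a malformed email", input: { email: "not-an-email", password: "correct-horse-battery" }, ok: false },
  { name: "rejects a short password", input: { email: "ada@example.com", password: "short1" }, ok: false },
];

// Green: the smallest implementation that makes every case pass.
// (The email regex is a rough format check, not full RFC 5322 validation.)
function validateRegistration({ email, password }) {
  const emailOk = /^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(email);
  const passwordOk = password.length >= 12;
  return emailOk && passwordOk;
}

// Run every case and collect the ones whose result doesn't match.
const failures = cases.filter((c) => validateRegistration(c.input) !== c.ok);
console.log(failures.length === 0 ? "all tests pass" : failures.map((c) => c.name));
```

The refactor step would come next, for example returning which rule failed, with the same cases rerun after every change to keep them green.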

Utilize sandboxing for safety

Use sandbox features to prevent unintended side effects when experimenting or dealing with risky changes.

Common sandbox commands:

- `gemini --sandbox` - Isolated environment for testing

- `claude --safe-mode` - Conservative change approach

- `git worktree` - Separate working directories

- `docker run --rm` - Disposable containers

Leverage checkpointing

Use checkpointing features as an "undo" button to easily revert changes if agents go in the wrong direction.

Checkpointing strategies:

- Before major changes: Create checkpoint before significant modifications

- Feature boundaries: Checkpoint at the completion of each feature

- Experimental branches: Use Git branches as natural checkpoints

- Automated snapshots: Set up automatic checkpointing intervals

Master Command-line functions

Explore built-in commands and features of your CLI agent to maximize productivity.

Useful CLI commands:

- `/agents` - Create specialized sub-agents (Claude Code)

- `/plan` - See proposed course of action (Gemini CLI)

- `/diff` - Show changes before applying

- `/rollback` - Revert to previous state

- `/context` - Manage conversation context

Advanced CLI techniques

🔗 Tool integration

CLI agents can be composed with other command-line tools for powerful workflows.

Integration examples:

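```bash
# Pipe recent logs to AI for anomaly detection
# (a fixed window rather than tail -f, which never ends the input stream)
tail -n 200 /var/log/app.log | gemini -p "Check these log lines for errors and anomalies"

# Analyze Git history with AI (-p runs the agent non-interactively)
git log --oneline | claude -p "Summarize recent changes and identify patterns"

# Process test results (aider takes its instruction via --message)
aider --message "Fix these failing tests: $(npm test 2>&1 | tail -n 100)"
```

💰 Cost optimization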

Use strategic combinations of free and paid tools to minimize costs while maximizing capabilities.

Cost-effective strategies:

- Planning with free tools: Use Gemini CLI (free, large context) for analysis and planning

- Execution with paid tools: Feed plans to Claude Code for implementation

- Batch processing: Group similar tasks to reduce API calls

- Context reuse: Maintain context across related tasks

🔄 Workflow automation

Create automated workflows that combine CLI agents with traditional development tools.

```bash
#!/bin/bash
# Automated code review with AI
set -euo pipefail

# Get files changed in the last commit (skip deleted files, which cat can't read)
CHANGED_FILES=$(git diff --name-only --diff-filter=d HEAD~1)

# Run AI code review (claude -p prints the result non-interactively)
echo "$CHANGED_FILES" | xargs cat | claude -p "Review this code for:
- Security vulnerabilities
- Performance issues
- Code quality problems
- Best practice violations"

# Run automated tests
npm test

# Generate commit message for the staged changes
git diff --cached | gemini -p "Generate a conventional commit message"
```

Multi-Agent orchestration strategies

🏗️ Multi-Agent architecture

Design systems where multiple AI agents work together, each with specialized roles and capabilities.

Example architecture:

- Planning Agent: Breaks down requirements into tasks

- Coding Agent: Implements features and fixes bugs

- Testing Agent: Writes and runs tests

- Review Agent: Performs code quality checks

- Documentation Agent: Creates and updates docs

🔒 Workspace isolation

Ensure agents work in isolated environments to prevent conflicts and enable parallel work.

Isolation techniques:

- Git branches: Each agent works on separate branches

- Git worktrees: Multiple working directories for same repo

- Docker containers: Completely isolated environments

- Virtual environments: Separate dependency management

📋 Task breakdown

Break large tasks into smaller, manageable sub-tasks that can be assigned to different agents.

E-commerce feature breakdown:

- Agent A: Design database schema for products

- Agent B: Create product API endpoints

- Agent C: Build shopping cart functionality

- Agent D: Implement payment processing

- Agent E: Write comprehensive tests

🎯 Clear instructions

Provide distinct, unambiguous instructions to each agent to ensure clean, predictable results.

Instruction best practices:

- Single responsibility: One clear task per agent

- Explicit dependencies: Define what each agent needs from others

- Success criteria: Clear definition of completion

- Constraints: Specify limitations and requirements

Advanced Orchestration patterns

🔄 Pipeline Orchestration

Create sequential workflows where the output of one agent becomes the input for the next.

CI/CD Pipeline with AI Agents:

```yaml
pipeline:
  - stage: analysis
    agent: code-analyzer
    task: "Analyze codebase for technical debt and security issues"
  - stage: implementation
    agent: developer
    task: "Fix issues identified in analysis stage"
    depends_on: analysis
  - stage: testing
    agent: tester
    task: "Write and run comprehensive tests"
    depends_on: implementation
  - stage: documentation
    agent: documenter
    task: "Update documentation based on changes"
    depends_on: [implementation, testing]
```

⚡ Parallel processing

Run multiple agents simultaneously on independent tasks to maximize throughput.

Parallel processing strategies:

- Feature parallelism: Different agents work on different features

- Layer parallelism: Frontend and backend development in parallel

- Testing parallelism: Unit, integration, and E2E tests simultaneously

- Platform parallelism: Web, mobile, and API development
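The `depends_on` fields in the pipeline above define a small dependency graph, and any stages whose dependencies are already satisfied can run at the same time. A minimal scheduler sketch, assuming every `depends_on` is normalized to a list and each agent is wrapped in a hypothetical async `runAgent(stage, inputs)` function (stubbed here). It runs in waves: all ready stages start together via `Promise.all`, and the next wave starts once the whole wave finishes, which keeps the sketch simple at some cost in throughput.

```javascript
async function runPipeline(stages, runAgent) {
  const results = new Map();
  const pending = new Map(stages.map((s) => [s.stage, s]));
  while (pending.size > 0) {
    // A stage is ready once every stage it depends on has produced a result.
    const ready = [...pending.values()].filter((s) =>
      (s.depends_on ?? []).every((dep) => results.has(dep)),
    );
    if (ready.length === 0) {
      throw new Error(`Unresolvable dependencies: ${[...pending.keys()].join(", ")}`);
    }
    // Independent stages in the same wave run concurrently; each receives
    // the outputs of the stages it depends on.
    const outputs = await Promise.all(
      ready.map((s) => {
        const inputs = Object.fromEntries((s.depends_on ?? []).map((dep) => [dep, results.get(dep)]));
        return runAgent(s, inputs);
      }),
    );
    ready.forEach((s, i) => {
      results.set(s.stage, outputs[i]);
      pending.delete(s.stage);
    });
  }
  return Object.fromEntries(results);
}

// Hypothetical agent stub: records the order in which stages ran.
const order = [];
async function fakeAgent(stage, inputs) {
  order.push(stage.stage);
  return `${stage.agent} finished (inputs: ${Object.keys(inputs).join(", ") || "none"})`;
}

const pipeline = [
  { stage: "analysis", agent: "code-analyzer" },
  { stage: "implementation", agent: "developer", depends_on: ["analysis"] },
  { stage: "testing", agent: "tester", depends_on: ["implementation"] },
  { stage: "documentation", agent: "documenter", depends_on: ["implementation", "testing"] },
];

runPipeline(pipeline, fakeAgent).then((results) => console.log(order, results));
```

Adding, say, frontend and backend stages that both depend only on `analysis` would put them in the same wave, which is where the parallel speedup comes from.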

🎯 Specialist Agents

Create agents with specific expertise for specialized tasks requiring domain knowledge.

Specialist Agent types:

- Security agent: Focused on security vulnerabilities and best practices

- Performance agent: Optimizes code for speed and efficiency

- Accessibility agent: Ensures WCAG compliance and inclusive design

- Database agent: Specializes in schema design and query optimization

✅ Quality assurance layers

Implement multiple QA checkpoints with different agents reviewing work at various stages.

QA layer structure:

- Code review agent: Reviews code quality and style

- Security review agent: Checks for security vulnerabilities

- Performance review agent: Analyzes performance implications

- Integration test agent: Verifies system integration

- Final review agent: Comprehensive final check

Orchestration best practices

🔍 Verification steps

Ask agents to perform verification steps like compiling and fixing linter errors after completing tasks.

Standard verification steps:

- Code compiles without errors

- All tests pass

- Linter rules satisfied

- Security scans clean

- Performance benchmarks met

📚 Context guidance

Explicitly guide agents to review important documentation and use it as context for their tasks.

Important context sources:

- `README.md` - Project overview and setup

- `CONTRIBUTING.md` - Development guidelines

- `instructions.md` - Specific task instructions

- API documentation and schemas

- Architecture decision records (ADRs)

🌐 Web browsing capabilities

Leverage agents that can browse the web for up-to-date information and external documentation.

Web browsing use cases:

- Fetching latest API documentation

- Researching current best practices

- Finding solutions to specific errors

- Checking compatibility information

- Gathering requirements from external sources

🎯 Bounded tasks

Start with well-defined, bounded tasks to build trust and ensure manageable reviews.

Good bounded tasks:

- "Add input validation to the user registration form"

- "Write unit tests for the payment processing module"

- "Optimize the database query in the user search function"

- "Update the API documentation for the new endpoints"

👁️ Human oversight

Always maintain human oversight as the final reviewer and approver of all agent work.

Human oversight responsibilities:

- Review all code changes before merging

- Validate that requirements are met

- Check for subtle errors and edge cases

- Ensure alignment with broader goals

- Make critical judgment calls

Advanced techniques & patterns

Model Context Protocol (MCP)

MCP is an open protocol that allows AI coding tools to connect to external services, data, and actions in a structured, secure way. Think "USB-C for AI integrations". It enables AI-assisted engineering workflows where models can pull real-time context, run tests, or fetch project-specific knowledge directly from standardized MCP servers.

MCP Visualized

MCP sits between your AI assistant and your tools, acting as a universal adapter. The AI sends requests via MCP, which routes them to connected servers and returns structured responses the AI can act on.
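Under the hood, MCP messages are JSON-RPC 2.0: a server advertises its tools through a `tools/list` method and executes them through `tools/call`. The sketch below is a deliberately simplified, in-process illustration of that request/response shape. It skips the initialization handshake, transports, and capability negotiation that a real server (for example, one built with the official MCP SDKs) handles, and the `run_tests` tool is hypothetical.

```javascript
// Hypothetical tool registry; inputSchema is a JSON Schema describing the arguments.
const toolRegistry = {
  run_tests: {
    description: "Run the test suite for a package and report the result",
    inputSchema: { type: "object", properties: { pkg: { type: "string" } }, required: ["pkg"] },
    handler: ({ pkg }) => `All tests passed for ${pkg}`, // stubbed result
  },
};

// Handles one JSON-RPC 2.0 request object and returns the response object.
function handleRequest(req) {
  const reply = (result) => ({ jsonrpc: "2.0", id: req.id, result });
  const fail = (code, message) => ({ jsonrpc: "2.0", id: req.id, error: { code, message } });

  switch (req.method) {
    case "tools/list":
      return reply({
        tools: Object.entries(toolRegistry).map(([name, t]) => ({
          name,
          description: t.description,
          inputSchema: t.inputSchema,
        })),
      });
    case "tools/call": {
      const name = req.params?.name;
      if (!Object.prototype.hasOwnProperty.call(toolRegistry, name)) {
        return fail(-32602, `Unknown tool: ${name}`); // JSON-RPC "invalid params"
      }
      const text = toolRegistry[name].handler(req.params.arguments ?? {});
      return reply({ content: [{ type: "text", text }], isError: false });
    }
    default:
      return fail(-32601, "Method not found"); // JSON-RPC standard error code
  }
}

const response = handleRequest({
  jsonrpc: "2.0",
  id: 1,
  method: "tools/call",
  params: { name: "run_tests", arguments: { pkg: "web-app" } },
});
console.log(JSON.stringify(response));
```

Because every server speaks this same shape, an AI client can discover and call tools it has never seen before, which is what makes the "universal adapter" comparison apt.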

Playwright MCP

An MCP server that lets AI tools run a browser, interact with it and perform automated browser tests on demand. It enables workflows like "test this PR in Chrome and Firefox" directly from your AI chat, returning pass/fail reports, screenshots, and logs without manual setup.

MCP Marketplace

A marketplace for MCP servers in Cline where developers can share and discover reusable MCP integrations. It allows teams to quickly find and deploy standardized services, like database access or API clients, that can be used across different AI coding tools.

Critique-driven development

Use code review feedback directly as prompts. This technique leverages specific, actionable feedback to generate targeted improvements.

```text
// Original review comment:
"This function is doing too much. Consider extracting the validation
logic into a separate function and add proper error handling."

// Transformed into AI prompt:
"Refactor this function to extract validation logic into a separate
function. Add proper error handling with try-catch blocks and
meaningful error messages. Follow single responsibility principle."
```

Iterative refinement patterns

Master the art of progressive improvement through structured iteration cycles.

Initial generation

Start with a broad, high-level prompt

Targeted refinement

Identify specific areas for improvement

Contextual enhancement

Add constraints and examples

Validation & testing

Test and validate the output

Advanced prompting strategies

Few-Shot prompting

Provide examples of desired output format and style

```text
// Example: API client generation
"Generate API client functions following this pattern:

Example:
async function getUser(id) {
  try {
    const response = await fetch(`/api/users/${id}`);
    if (!response.ok) throw new Error('Failed to fetch user');
    return await response.json();
  } catch (error) {
    console.error('Error fetching user:', error);
    throw error;
  }
}

Now create similar functions for: getPosts, createPost, updatePost"
```

Chain-of-Thought prompting

Guide AI through step-by-step reasoning

"Before writing the code, let's think through this step by step:
1. First, identify what data we need to fetch
2. Then, determine the best data structure to store it
3. Consider error cases and edge conditions
4. Finally, implement with proper error handling

Now, walk me through your reasoning for each step,
then provide the implementation."

Role-Based prompting

Set context by defining AI's expertise and perspective

"You are a senior full-stack developer with 10 years of experience
in React and Node.js, specializing in performance optimization
and security best practices.

Given this React component that's experiencing performance issues
with large datasets, suggest optimizations while maintaining
accessibility and security standards."

Constraint-based prompting

Define clear boundaries and requirements
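Constraints this concrete have a side benefit: some of them can be checked mechanically when the code comes back. A rough sketch that checks two of the constraints in the prompt below, the line budget and the ban on external authentication libraries. The package names in the deny-list are examples, and scanning import lines with a regex is a heuristic, not a parser.

```typescript
interface ConstraintReport {
  lineCount: number;
  withinLineBudget: boolean;
  forbiddenImports: string[];
}

// Example deny-list; adjust to the libraries your constraint actually rules out.
const FORBIDDEN_AUTH_LIBS = ["passport", "next-auth", "jsonwebtoken"];

function checkConstraints(source: string, maxLines = 200): ConstraintReport {
  // Count non-blank lines so trailing newlines don't skew the budget.
  const lineCount = source.split("\n").filter((l) => l.trim() !== "").length;

  // Heuristic: capture module names from `... from "x"` and `require("x")`.
  const specifiers: string[] = [];
  const importPattern = /(?:from\s+|require\()\s*["']([^"']+)["']/g;
  let match: RegExpExecArray | null;
  while ((match = importPattern.exec(source)) !== null) {
    specifiers.push(match[1]);
  }

  // Flag exact matches plus subpaths ("lib/x") and plugin packages ("lib-x").
  const forbiddenImports = specifiers.filter((s) =>
    FORBIDDEN_AUTH_LIBS.some((lib) => s === lib || s.startsWith(`${lib}/`) || s.startsWith(`${lib}-`)),
  );

  return { lineCount, withinLineBudget: lineCount <= maxLines, forbiddenImports };
}

const sample = [
  'import passport from "passport";',
  'import { scryptSync } from "node:crypto";',
].join("\n");
console.log(checkConstraints(sample).forbiddenImports); // [ 'passport' ]
```

Constraints such as "secure password hashing" or "comprehensive error messages" still need human review; a check like this only catches the measurable ones.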

"Create a user authentication system with these constraints:
- Must use TypeScript
- No external authentication libraries
- Include input validation
- Maximum 200 lines of code
- Must handle password hashing securely
- Include comprehensive error messages"

Cost-Benefit analysis framework

Learn to evaluate when to accept AI assistance based on complexity, familiarity, and potential impact.

High Benefit, Low Cost

Ideal Zone - Accept immediately

- Code completion

- Boilerplate generation

- Unit test creation

- Documentation

High Benefit, High Cost

Careful Evaluation - Worth the investment

- Complex refactoring

- Architecture decisions

- Performance optimizations

- Security implementations

Low Benefit, Low Cost

Optional - Use for learning

- Simple formatting

- Comment generation

- Variable renaming

- Basic explanations

Low Benefit, High Cost

Avoid - Not worth the time

- Over-engineered solutions

- Unfamiliar patterns in critical code

- Complex UI without clear requirements
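The matrix comes down to two questions per task: how much does AI help, and what does accepting its output cost in complexity, unfamiliarity, and potential impact? Encoding the quadrants as data, as in this small sketch, makes them easy to reuse in a team checklist or review template. The zone names and advice strings come from the matrix above; the two-level `Level` scale is a simplification.

```typescript
type Level = "low" | "high";

interface Zone {
  name: string;
  advice: string;
}

// The four quadrants, indexed as ZONES[benefit][cost].
const ZONES: Record<Level, Record<Level, Zone>> = {
  high: {
    low: { name: "Ideal Zone", advice: "Accept immediately" },
    high: { name: "Careful Evaluation", advice: "Worth the investment" },
  },
  low: {
    low: { name: "Optional", advice: "Use for learning" },
    high: { name: "Avoid", advice: "Not worth the time" },
  },
};

function classify(benefit: Level, cost: Level): Zone {
  return ZONES[benefit][cost];
}

// Complex refactoring: high benefit, but careful review makes it costly.
console.log(classify("high", "high").name); // Careful Evaluation
```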

Context management strategies

Advanced techniques for providing optimal context to AI systems.

Progressive context building

// Start with core context
#file src/types/User.ts
#file src/api/userService.ts

// Add related context as needed
#file src/components/UserProfile.tsx
#openfiles // Include all currently open files

// Provide specific constraints
"Following the existing patterns in userService.ts,
create a new function to handle user preferences."

Schema-driven development

Include database schemas, API specifications, and type definitions
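Types only exist at compile time, so when the contract matters at runtime (for example, confirming that an endpoint really returns what a generated component expects), a small guard can enforce it. A sketch against the `POST /api/users` contract below. `UserPreferences` is not spelled out in the original, so it is treated as an opaque object here, and `createdAt` is assumed to arrive as an ISO string because JSON has no date type.

```typescript
// UserPreferences is not defined in the source; treated as an opaque object here.
type UserPreferences = Record<string, unknown>;

interface User {
  id: string;
  email: string;
  preferences: UserPreferences;
  createdAt: Date;
}

interface RegistrationResponse {
  user: User;
  token: string;
}

function isObject(value: unknown): value is Record<string, unknown> {
  return typeof value === "object" && value !== null;
}

// Validate the raw JSON body against the contract and convert createdAt to a Date.
function parseRegistrationResponse(body: unknown): RegistrationResponse {
  if (!isObject(body)) throw new Error("Response is not an object");
  const { user, token } = body;
  if (typeof token !== "string" || !isObject(user)) {
    throw new Error("Response does not match { user: User, token: string }");
  }
  const { id, email, preferences, createdAt } = user;
  if (
    typeof id !== "string" ||
    typeof email !== "string" ||
    !isObject(preferences) ||
    typeof createdAt !== "string"
  ) {
    throw new Error("Response.user does not match the User interface");
  }
  const created = new Date(createdAt);
  if (Number.isNaN(created.getTime())) throw new Error("createdAt is not a valid date");
  return { token, user: { id, email, preferences, createdAt: created } };
}
```

Libraries such as Zod generate this kind of check from a single schema declaration; the hand-written version just makes the idea visible.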

// Include TypeScript interfaces
interface User {
  id: string;
  email: string;
  preferences: UserPreferences;
  createdAt: Date;
}

// Include API endpoint documentation
// POST /api/users
// Body: { email: string, password: string }
// Returns: { user: User, token: string }

"Create a user registration component that follows
this exact schema and API contract."

Error-Driven Context

Use error messages and stack traces as context for debugging
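For the `TypeError` in the example below, one common fix is to normalize the response before mapping over it. This sketch assumes the API may return either a bare array or an object with a `users` field; both shapes are assumptions, since the real response format is elided in the prompt.

```typescript
interface UserSummary {
  id: string;
  name: string;
}

// Accept either a bare array or an object with a `users` array (both shapes are
// assumptions) and always return an array, so `.map` never runs on undefined.
function toUserList(data: unknown): UserSummary[] {
  if (Array.isArray(data)) return data as UserSummary[];
  if (typeof data === "object" && data !== null) {
    const users = (data as { users?: unknown }).users;
    if (Array.isArray(users)) return users as UserSummary[];
  }
  return []; // missing, null, or unexpected payloads render as an empty list
}

// The component can then map unconditionally, e.g.
// toUserList(response).map((u) => <li key={u.id}>{u.name}</li>)
console.log(toUserList(undefined).length); // 0
```

This only guards the container; checking that each element really has `id` and `name` is a separate step.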

// Include full error with context
"I'm getting this error:
TypeError: Cannot read properties of undefined (reading 'map')
    at UserList.jsx:25:8
    at Array.map (<anonymous>)

Here's the component code:
[paste component]

And here's the API response format:
[paste example response]

The error happens when the API returns an empty array.
How should I handle this case?"

Production-ready AI-assisted development

⚠️ The security reality check

"Vibe coding is fun until you start leaking database credentials in the client." This real-world example shows why production deployment requires more than just functional code.

Production principles

Always review AI-generated code

Treat AI-generated code like code from a junior developer. It needs careful review and testing before submission.

- Check for security vulnerabilities

- Verify error handling

- Validate performance implications

- Ensure maintainability standards

Have a comprehensive testing strategy

AI can help generate tests, but you must verify coverage and quality.
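Verifying generated tests mostly means checking that each requested case has a real assertion behind it. A sketch of table-driven tests against a hypothetical `validateCredentials` input check, using Node's built-in `node:assert` instead of Jest so it runs without dependencies. It covers the valid, malformed-input, empty-string, and injection-shaped cases from the prompt below, but not network errors. Rejecting an injection-shaped email only tests input validation; actual SQL injection protection comes from parameterized queries.

```typescript
import { strictEqual } from "node:assert";

// Hypothetical input check that runs before any database or network call.
function validateCredentials(email: unknown, password: unknown): string | null {
  if (typeof email !== "string" || typeof password !== "string") return "Malformed input";
  if (email.trim() === "" || password === "") return "Email and password are required";
  if (!/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(email)) return "Invalid email format";
  return null; // null means the input is acceptable
}

// Each row: email, password, expected error (null = valid input).
const cases: Array<[unknown, unknown, string | null]> = [
  ["ada@example.com", "correct horse", null],
  ["", "secret", "Email and password are required"],
  ["ada@example.com", "", "Email and password are required"],
  [42, "secret", "Malformed input"],
  ["' OR '1'='1", "x", "Invalid email format"],
];

for (const [email, password, expected] of cases) {
  strictEqual(validateCredentials(email, password), expected);
}
console.log(`${cases.length} cases passed`);
```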

// Prompt for AI-generated tests
"Generate comprehensive unit tests for this user authentication function.
Include tests for:
- Valid credentials
- Invalid credentials
- Network errors
- Malformed input
- Edge cases like empty strings
- Security scenarios like SQL injection attempts

Use Jest and follow our existing test patterns in /tests/auth/"

Security-first development

AI can introduce security vulnerabilities. Always validate security practices.

Security validation checklist:

- ✅ Input validation and sanitization

- ✅ Authentication and authorization

- ✅ SQL injection prevention

- ✅ XSS protection

- ✅ Sensitive data handling

- ✅ API key and credential management

- ✅ HTTPS and secure communications
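Two checklist items lend themselves to a short illustration: sensitive data handling (passwords) and credential management. The sketch below hashes passwords with Node's built-in `scrypt`, compares hashes in constant time, and reads secrets from the environment instead of hard-coding them. The `salt:hash` storage format, the 16-byte salt, and the 64-byte key length are illustrative choices, not requirements.

```typescript
import { randomBytes, scryptSync, timingSafeEqual } from "node:crypto";

const KEY_LENGTH = 64; // bytes of derived key (illustrative choice)

// Hash a password with a fresh random salt; store the result as "salt:hash" in hex.
function hashPassword(password: string): string {
  const salt = randomBytes(16);
  const hash = scryptSync(password, salt, KEY_LENGTH);
  return `${salt.toString("hex")}:${hash.toString("hex")}`;
}

// Re-derive the hash with the stored salt and compare in constant time.
function verifyPassword(password: string, stored: string): boolean {
  const [saltHex, hashHex] = stored.split(":");
  if (!saltHex || !hashHex) return false;
  const expected = Buffer.from(hashHex, "hex");
  if (expected.length !== KEY_LENGTH) return false; // malformed record
  const actual = scryptSync(password, Buffer.from(saltHex, "hex"), KEY_LENGTH);
  return timingSafeEqual(actual, expected);
}

// Secrets come from the server environment (or a secrets manager), never from
// source code, and never get bundled into client-side code.
function requireEnv(name: string): string {
  const value = process.env[name];
  if (!value) throw new Error(`Missing required environment variable ${name}`);
  return value;
}

const stored = hashPassword("correct horse battery staple");
console.log(verifyPassword("correct horse battery staple", stored)); // true
console.log(verifyPassword("wrong guess", stored)); // false
```

On the server, a call such as `requireEnv("DATABASE_URL")` at startup fails fast when a secret is missing; the variable name is only an example.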

Performance & scalability

AI might generate functional but inefficient code. Always consider performance.
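A frequent case of "functional but inefficient" is a linear search inside a loop. The sketch below contrasts a nested search, which does roughly `orders * users` comparisons, with a version that indexes users in a `Map` once and then does average constant-time lookups. The `Order` and `User` shapes are hypothetical.

```typescript
interface User {
  id: string;
  name: string;
}
interface Order {
  id: string;
  userId: string;
}

// O(orders * users): scans the whole user list for every order.
function attachUsersSlow(orders: Order[], users: User[]) {
  return orders.map((o) => ({ ...o, user: users.find((u) => u.id === o.userId) }));
}

// O(orders + users): index users by id once, then look each order up in the Map.
function attachUsersFast(orders: Order[], users: User[]) {
  const byId = new Map(users.map((u) => [u.id, u] as const));
  return orders.map((o) => ({ ...o, user: byId.get(o.userId) }));
}

const users: User[] = [{ id: "u1", name: "Ada" }, { id: "u2", name: "Lin" }];
const orders: Order[] = [{ id: "o1", userId: "u2" }, { id: "o2", userId: "u3" }];
console.log(attachUsersFast(orders, users)[0].user?.name); // Lin
```

Both versions return the same result; the difference only shows up at scale, which is why asking the model about expected data sizes up front tends to pay off.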

// Performance-focused prompting
"Optimize this database query for a table with 1M+ records.
Consider:
- Proper indexing strategies
- Query execution plan
- Memory usage
- Connection pooling
- Caching opportunities

Explain your optimization choices and include before/after
performance comparisons."

AI across the Software Development Lifecycle (SDLC)

Professional AI-assisted engineering integrates AI tools systematically across all phases of development.

📋 Planning & Design

- AI planning collaborators

- Design document generation

- Task breakdown and estimation

- Architecture recommendations

💾 Coding & Implementation

- Code completion and generation

- Refactoring assistance

- Migration support

- Documentation generation

🧪 Testing & Quality Assurance

- Automated test generation

- Test coverage analysis

- Bug detection and fixing

- Code quality improvements

🚀 Deployment & Operations

- Build automation

- Deployment configuration

- Monitoring setup

- Performance optimization

Quality Gates for AI-Generated Code

Implement systematic quality controls to ensure AI-generated code meets production standards.

🔍 Code Review Checklist

🧪 Testing Requirements

Unit Tests

Every AI-generated function needs corresponding unit tests

Integration Tests

Test AI-generated components in context with existing systems

Security Tests

Validate security controls and input handling

Performance Tests

Ensure generated code meets performance requirements
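These requirements can be enforced mechanically in CI rather than by memory. A sketch of a gate that checks a change's metadata; the `ChangeSummary` shape, including the latency budget, is hypothetical and stands in for whatever your CI and test suites actually report.

```typescript
// Hypothetical summary of a change, assembled from CI and test reports.
interface ChangeSummary {
  aiGeneratedFunctions: string[]; // functions flagged as AI-generated in this change
  testedFunctions: Set<string>; // functions with at least one unit test
  integrationTestsPassed: boolean;
  securityTestsPassed: boolean;
  p95LatencyMs: number; // measured by the performance suite
  latencyBudgetMs: number;
}

// Returns the failed gates; an empty list means the change may merge.
function checkQualityGates(c: ChangeSummary): string[] {
  const failures: string[] = [];
  const untested = c.aiGeneratedFunctions.filter((f) => !c.testedFunctions.has(f));
  if (untested.length > 0) failures.push(`Unit tests missing for: ${untested.join(", ")}`);
  if (!c.integrationTestsPassed) failures.push("Integration tests failed");
  if (!c.securityTestsPassed) failures.push("Security tests failed");
  if (c.p95LatencyMs > c.latencyBudgetMs) {
    failures.push(`p95 latency ${c.p95LatencyMs}ms exceeds budget ${c.latencyBudgetMs}ms`);
  }
  return failures;
}

const failures = checkQualityGates({
  aiGeneratedFunctions: ["parseDate", "formatPrice"],
  testedFunctions: new Set(["parseDate"]),
  integrationTestsPassed: true,
  securityTestsPassed: true,
  p95LatencyMs: 120,
  latencyBudgetMs: 200,
});
console.log(failures.join("\n")); // Unit tests missing for: formatPrice
```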

The Future of AI-Assisted Development

What does the road ahead look like?

We're moving from simple code completion to intelligent agents that can reason, plan, and execute complex development tasks autonomously while maintaining human oversight and control.

Some emerging trends

Autonomous Agents

AI agents that can take complex actions across entire codebases, reason about system architecture, and implement features end-to-end.

Examples in development:

- Cline's autonomous Chrome interaction

- Multi-agent debugging systems

- Automated migration tools

- Self-healing production systems

Visual development

AI systems that can see, understand, and interact with user interfaces to provide more contextual assistance.

Current capabilities:

- Visual element inspection (Bolt, Lovable)

- Screenshot-to-code generation

- UI debugging with visual feedback

- Design-to-implementation workflows

Reasoning & planning

Advanced models that can break down complex problems, create detailed plans, and execute multi-step solutions.

Capabilities:

- Architecture decision making

- Performance optimization strategies

- Security vulnerability assessment

- Technical debt prioritization

Continuous learning

AI systems that learn from your codebase, team practices, and feedback to provide increasingly personalized assistance.

Personalization areas:

- Team coding standards

- Architecture preferences

- Performance patterns

- Security requirements

A future development workflow

Imagine a development experience where AI agents handle routine tasks, provide intelligent suggestions, and enable developers to focus on high-level problem solving and creative solutions.

Intent definition

Describe what you want to build at a high level. AI understands context, requirements, and constraints from natural language.

Intelligent planning

AI generates detailed technical plans, considers architecture decisions, and suggests optimal implementation strategies.

Autonomous implementation

AI agents implement features across multiple files, handle integrations, and generate comprehensive tests.

Intelligent review

AI provides detailed code review, security analysis, and performance optimization suggestions.

Automated deployment

AI manages deployment pipelines, monitors performance, and provides optimization recommendations.

Preparing for the future

The models available today are the "worst" they'll ever be. Here's how to future-proof your skills and workflows.

🧠 Mindset shifts

- From coding to curating: Focus on providing context, validating outputs, and making architectural decisions

- From implementation to intent: Become expert at expressing what you want rather than how to build it

- From solo to collaborative: Learn to work with AI as a pair programming partner

- From reactive to proactive: Use AI to anticipate problems and optimize before issues arise

🛠️ Skill development

- Prompt engineering: Master the art of clear, contextual communication with AI

- AI tool fluency: Stay current with evolving AI development tools and capabilities

- Critical evaluation: Develop strong skills in reviewing and validating AI-generated code

- System thinking: Focus on architecture, design patterns, and high-level problem solving

🔧 Workflow evolution

- Iterative development: Embrace rapid prototyping and incremental improvement

- Context management: Develop systems for organizing and providing relevant context to AI

- Quality gates: Implement robust review and testing processes for AI-generated code

- Continuous learning: Stay adaptable as AI capabilities expand rapidly

Your next steps

The future of software development is being written now. Here's how to get started and continue your journey beyond vibe coding.

🚀 Start today

- Choose one AI tool from this guide and build your first project

- Implement the core best practices: plan first, provide context, test ruthlessly

- Practice advanced prompting techniques on real problems

- Set up quality gates and review processes

📈 Level Up (Next 30 days)

- Build a production application using AI assistance throughout the lifecycle

- Experiment with different tools for different use cases

- Develop your personal prompting style and context management system

- Share learnings with your team and start collaborative AI-assisted development

🎯 Master Level (Next 90 days)

- Implement AI assistance across your entire development workflow

- Develop custom prompts and workflows for your specific domain

- Contribute to the AI-assisted development community

- Stay current with emerging tools and techniques